Oct. 27, 2010 — University of Utah computer scientists developed software that quickly edits “extreme resolution imagery” – huge photographs containing billions to hundreds of billions of pixels or dot-like picture elements. Until now, it took hours to process these “gigapixel” images. The new software needs only seconds to produce preview images useful to doctors, intelligence analysts, photographers, artists, engineers and others.

By sampling only a fraction of the pixels in a massive image – for example, a satellite photo or a panorama made of hundreds of individual photos – the software can produce good approximations or previews of what the fully processed image will look like.

That allows someone to interactively edit and analyze massive images – pictures larger than a gigapixel (billion pixels) – in seconds rather than hours, says Valerio Pascucci, an associate professor of computer science at the University of Utah and its Scientific Computing and Imaging (SCI) Institute.

“You can go anywhere you want in the image,” he says. “You can zoom in, go left, right. From your perspective, it is as if the full ‘solved’ image has been computed.”

He compares the photo-editing software with public opinion polling: “You ask a few people and get the answer as if you asked everyone. It’s exactly the same thing.”

The new software – Visualization Streams for Ultimate Scalability, or ViSUS – allows gigapixel images stored on an external server or drive to be edited from a large computer, a desktop or laptop computer, or even a smart phone, Pascucci says.

“The same software runs very well on an iPhone or a large computer,” he adds.

A study describing development of the ViSUS software is scheduled for online publication Saturday, Oct. 30 in the world’s pre-eminent computer graphics journal, ACM Transactions on Graphics, published by the Association for Computing Machinery.

The paper calls ViSUS “a simple framework for progressive processing of high-resolution images with minimal resources … [that] for the first time, is capable of handling gigapixel imagery in real time.”

Pascucci conducted the research with University of Utah SCI Institute colleagues Brian Summa, a doctoral student in computing; Giorgio Scorzelli, a senior software developer; and Peer-Timo Bremer, a computer scientist at Lawrence Livermore National Laboratory in California, where co-author Ming Jiang also works.

The research was funded by the U.S. Department of Energy and the National Science Foundation. The University of Utah Research Foundation and Lawrence Livermore share a patent on the software, and the researchers plan to start a company to commercialize ViSUS.

From Atlanta to Atlantis – and Stitching Salt Lake City

Pascucci defines massive imagery as images containing more than one gigapixel, which is equal to 100 photos from a 10-megapixel (10 million pixel) digital camera.

In the study, the computer scientists tested ViSUS on images ranging in size from megapixels (millions of picture elements) to hundreds of gigapixels, to show how well the software let them interactively edit images of any size, from small to extremely large.

In one example, they used the software to perform “seamless cloning,” which means taking one image and merging it with another image. They combined a 3.7-gigapixel image of the entire Earth with a 116-gigapixel satellite photo of the city of Atlanta, zooming in on the Gulf of Mexico and putting Atlanta underwater there.

“An artist can interactively place a copy of Atlanta under shallow water and recreate the lost city of Atlantis,” says the new study, which is titled, “Interactive Editing of Massive Imagery Made Simple: Turning Atlanta into Atlantis.”

“It’s just a way to demonstrate how an artist can manipulate a huge amount of data in an image without being encumbered by the file size,” says Pascucci.

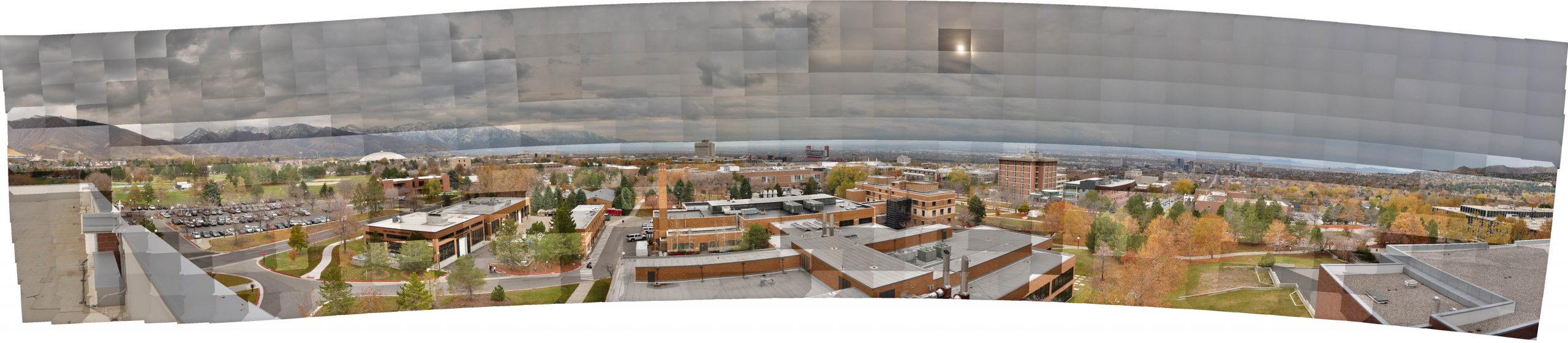

Pascucci, Summa and colleagues also used a camera mounted on a robotic panning device and placed atop a University of Utah building to take 611 photographs during a six-hour period. Together, the photos covered the entire Salt Lake Valley.

At full resolution, it took them four hours to do “panorama stitching,” which means combining the mosaic of photos into a 3.27-gigapixel panorama of the valley while eliminating the seams between the images and the differences in their exposures, says Summa, first author of the study.

But using the ViSUS software, it took only two seconds to create a “global preview” of the entire Salt Lake panorama that looked almost as good – and had a relatively low resolution of only 0.9 megapixels, or only one-3,600th as much data as the full-resolution panorama.

And that preview image is interactive, so a photo editor can make different adjustments – such as tint, color intensity and contrast – and see the effects in seconds.

Pascucci says ViSUS’ significance is not in creating the preview, but in allowing an editor to zoom in on any part of the low-resolution panorama and quickly see and edit a selected portion of it at full resolution. Older software required the full-resolution image to be processed before it could be edited.

Uses for Quick Editing of Big Pictures

Pascucci says the method can be used to edit medical images such as MRI and CT scans – and can do so in three dimensions, even though their study examined only two-dimensional images. “We can handle 2-D and 3-D in the same way,” he says.

The software also might lead to more sophisticated computer games. “We are studying the possibility of involving the player in building their own [gaming] environment on the fly,” says Pascucci.

The software also will be useful to intelligence analysts examining satellite photos, and to researchers using high-resolution microscopes to study, for example, how the eye’s light-sensing retina is “wired” by nerves.

An intelligence analyst may need to compare two 100-gigabyte satellite photos of the same location but taken at different times – perhaps to learn if aircraft or other military equipment arrived or left that location between the times the photos were taken.

Conventional software to compare the photos must go through all the data in each photo and compare differences – a process that “would take hours. It might be a whole day,” Pascucci says. But with ViSUS, “we quickly build an approximation of the difference between the images, and allow the analyst to explore interactively smaller regions of the total image at higher resolution without having to wait.”
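As a rough illustration of that idea, the sketch below compares two images on a coarse grid first and at full resolution only inside a chosen window. It is not the ViSUS method: the function names, the list-of-lists image layout and the plain every-Nth-pixel sampling are all assumptions made for the example, and sampling this simple can miss changes that fall between sample points.

```python
def coarse_difference(image_a, image_b, stride):
    """Absolute difference of two equally sized images, sampled every `stride` pixels.

    Images are assumed to be row-major lists of lists of numbers.
    """
    return [
        [abs(a - b) for a, b in zip(row_a[::stride], row_b[::stride])]
        for row_a, row_b in zip(image_a[::stride], image_b[::stride])
    ]


def region_difference(image_a, image_b, x0, y0, x1, y1):
    """Full-resolution difference for the window [x0, x1) x [y0, y1)."""
    return [
        [abs(a - b) for a, b in zip(row_a[x0:x1], row_b[x0:x1])]
        for row_a, row_b in zip(image_a[y0:y1], image_b[y0:y1])
    ]


# Two tiny 8 x 8 "photos" that differ in a single pixel.
before = [[0] * 8 for _ in range(8)]
after = [row[:] for row in before]
after[4][4] = 9

overview = coarse_difference(before, after, stride=4)      # 2 x 2 overview
detail = region_difference(before, after, 4, 4, 6, 6)      # 2 x 2 window at full resolution
```

The overview touches only four of the 64 pixels in each image; the detailed pass reads full-resolution data only for the window the analyst picks.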

How It Works: Catching Some Zs

Pascucci says two key parts of the software must work together delicately:

- “One is the way we store the images – the order in which we store the pixels on the disk. That is part of the technology being patented” because the storage format “allows you to retrieve the sample of pixels you want really fast.”

- How the data are processed is the software’s second crucial feature. The algorithm – a set of formulas and rules – for processing image data allows the researchers to use only a subset of pixels, which they can move efficiently.

The image processing method can produce previews at various resolutions by taking progressively more and more pixels from the data that make up the entire full-resolution image.
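A minimal sketch of progressive previews, assuming the simplest possible scheme: take every Nth pixel in each direction and halve N at each step. The article does not describe the sampling pattern ViSUS actually uses, so the function names and the power-of-two strides here are hypothetical.

```python
def progressive_levels(width, height, max_level):
    """Yield (level, stride, sample_count) from the coarsest preview to the full image.

    At level L the preview keeps every (2 ** (max_level - L))-th pixel per axis,
    so each finer level contains all the samples of the coarser ones.
    """
    for level in range(max_level + 1):
        stride = 2 ** (max_level - level)
        cols = (width + stride - 1) // stride   # ceiling division
        rows = (height + stride - 1) // stride
        yield level, stride, cols * rows


def sample(image, stride):
    """Return every `stride`-th pixel of a row-major list-of-lists image."""
    return [row[::stride] for row in image[::stride]]


# An 8 x 8 test image whose pixel value encodes its position.
image = [[x + 8 * y for x in range(8)] for y in range(8)]
levels = list(progressive_levels(8, 8, 3))
coarse = sample(image, 4)
```

Each step in this sketch reads four times as many pixels as the one before it, so an early preview can be shown long before the last level is loaded.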

Normally, the amount of memory used in a computer to edit and preview a massive image would have to be large enough to handle the entire data set for that image.

“In our method, the preview has constant size, so it can always fit in memory, even if the fine-resolution data keep growing,” Pascucci says.
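One way to picture a constant-size preview: for whatever region is on screen, pick the coarsest sampling step needed to stay under a fixed pixel budget. The sketch below does that with power-of-two steps. The function name and the budget parameter are assumptions for illustration, not part of ViSUS.

```python
def stride_for_budget(view_width, view_height, budget_pixels):
    """Smallest power-of-two stride whose sampled view fits within `budget_pixels`."""
    stride = 1
    while True:
        cols = -(-view_width // stride)    # ceiling division without floats
        rows = -(-view_height // stride)
        if cols * rows <= budget_pixels:
            return stride
        stride *= 2


budget = 1024 * 1024                                  # preview never exceeds about 1 megapixel
whole_image = stride_for_budget(4096, 4096, budget)   # zoomed all the way out
zoomed_in = stride_for_budget(1024, 1024, budget)     # a window small enough to show in full
```

Zooming in shrinks the visible region, so the stride drops toward 1 and the same memory budget buys more detail. The preview size stays bounded no matter how large the stored image is.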

Data for the full-resolution image are stored on a disk or drive, and ViSUS repeatedly swaps data with the disk as needed for creating new preview images as editing progresses. The software does that very efficiently by pulling more and more data subsets from the full image data in the form of progressively smaller Z-shaped sets of pixels.
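The article does not spell out the patented storage format. A common textbook way to obtain Z-shaped pixel orderings is the Z-order (Morton) curve, which interleaves the bits of a pixel's x and y coordinates. The sketch below shows that general technique only; it should not be read as the ViSUS layout, and the function names are made up for the example.

```python
def morton_index(x, y, bits=16):
    """Interleave the low `bits` bits of x and y into one Z-order index.

    Bits of x land in even positions and bits of y in odd positions.
    """
    index = 0
    for i in range(bits):
        index |= ((x >> i) & 1) << (2 * i)
        index |= ((y >> i) & 1) << (2 * i + 1)
    return index


def z_order(width, height):
    """Return all (x, y) pixel coordinates of a grid sorted along the Z-order curve."""
    coords = [(x, y) for y in range(height) for x in range(width)]
    return sorted(coords, key=lambda p: morton_index(p[0], p[1]))


smallest_z = z_order(2, 2)        # one Z: top-left, top-right, bottom-left, bottom-right
first_eight = z_order(4, 4)[:8]   # two small Zs that form the top stroke of a larger Z
```

Pixels that are close together in the image tend to stay close together in this ordering, which is why Z-order layouts are often used when a program needs to read a small region or a coarse sample from disk without scanning the whole file.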

Pascucci says ViSUS’ major contribution is that “we don’t need to read all the data to give you an approximation” of the full image.

If an image contained a terabyte of data – a trillion bytes – the software could produce a good approximation of the image using only one-millionth of the total image data, or about a megabyte, Pascucci says.

The computer scientists now have gone beyond the 116-gigapixel Atlanta image and, in unpublished work, have edited satellite images of multiple cities exceeding 500 gigapixels. The next target: a terapixel image – 1,000 gigapixels or 1 trillion pixels.

For more information on the Scientific Computing and Imaging Institute, see:

http://www.sci.utah.edu

For information on the University of Utah College of Engineering, see:

http://www.coe.utah.edu